Why Software Breaks

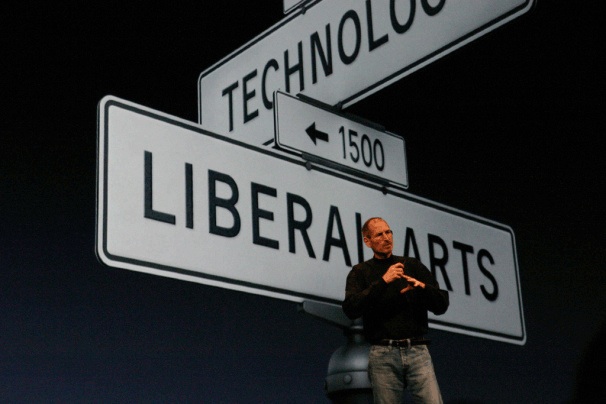

PostedIn Steve Jobs’ March 2011 keynote, he shared his thoughts on Apple, technology & the humanities, all wrapped up in this frequently cited quote about how he viewed Apple at the intersection between technology and the liberal arts…

“It is in Apple’s DNA that technology alone is not enough— it’s technology married with liberal arts, married with the humanities, that yields us the result that makes our heart sing.”

Steve Jobs, March 2011

Steve wasn’t speaking entirely in platitudes here. His thoughts were about Apple and the iPad and its place in the post-PC world. But unless you’ve followed Apple for a while or thought about it, this idea of aligning Apple with “technology + liberal arts” has gone over most peoples heads.

Here’s more of what he said (emphasis mine):

“… a lot of folks in this tablet market are rushing in and they’re looking at this as the next PC. The hardware and the software are done by different companies, and they’re talking about speeds and feeds just like they did with PCs. And our experience and every bone in our body says that that’s not the right approach to this. […] And we think we’re in the right track with this. We think we have the right architecture, not just in silicon but in the organization to build these kinds of products.

Software Failure Modes

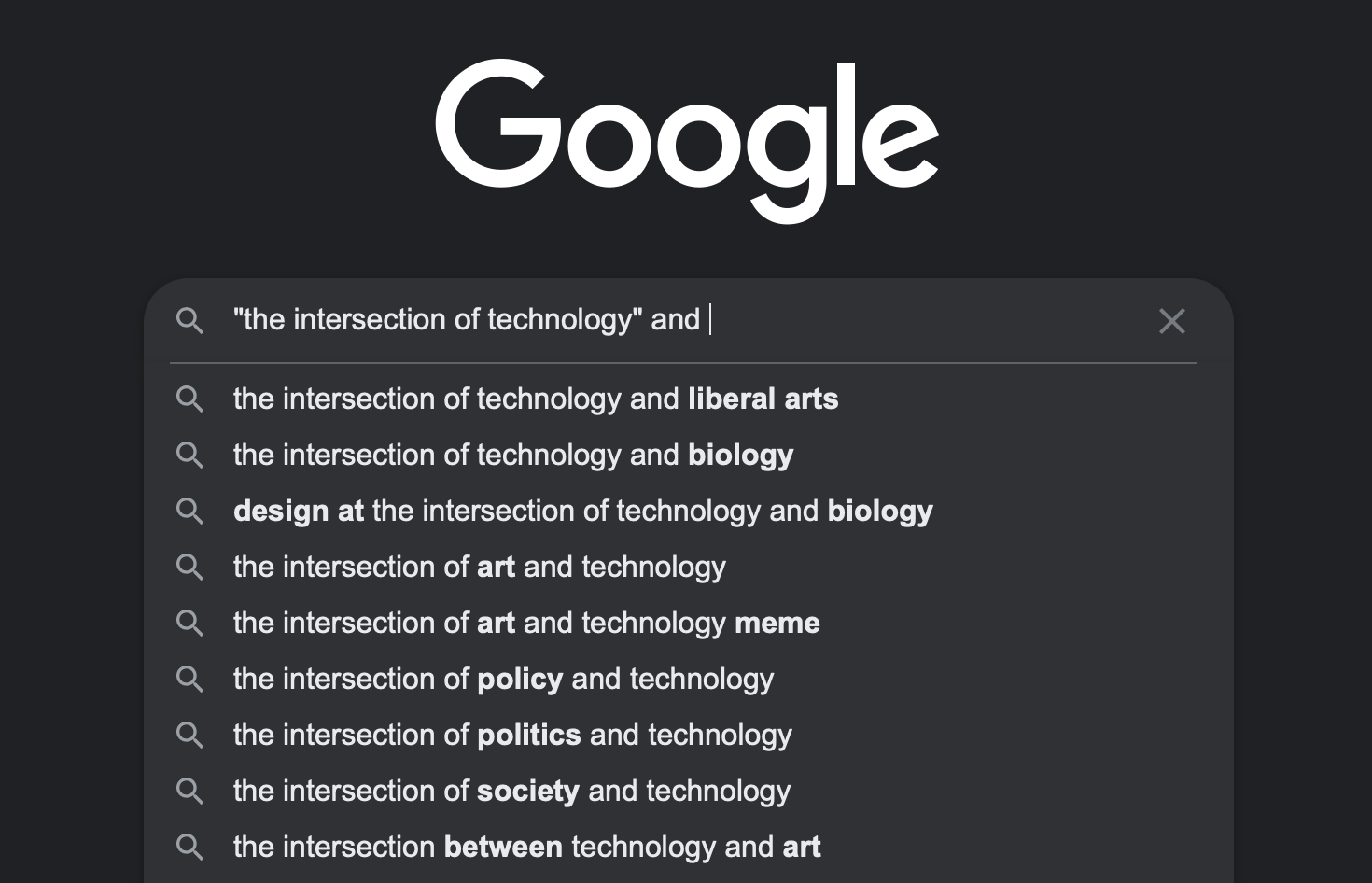

As spot-on as Steve Jobs was about Apple, others associating the word “technology” with some seemingly-orthogonal concept has become a common trope, as Google search can attest (try searching for “intersection of technology and..” to see what I mean). When organizations talk about the failure of technology to produce desired outcomes (aka “when things break”), they start with the broadest of notions yet assume that drilling down to a singular failure point will inherently demonstrate the best result. As much as companies say they place a premium on investing in their people (i.e., “technology + people”), as soon as something goes wrong, it’s either a technology problem or a people problem.

The problem with this approach is how it ignores the fact that many causes of failure are rooted in the interplay of technology and people.

The intersection of technology and..

Case in point: When is a one-line config change, not a one-line config change? Answer: When it runs your test suite against the production DB.

As the example above suggests, test suites don’t magically run themselves against production environments. Think about all the decision-making (or lack of decision-making) that goes into a one-line change with consequences as severe as dropping the production DB before a test run (and not being aware that it’s the production database).

There’s an assumption that successful outcomes result from holistic endeavors, but failure modes can be reduced to a single root cause. Just think about how crazy that sounds.

Some issues are simple cause & effect that can be reversed: find the reason, and undo its effect. But catastrophic failures can have their “root cause” in technology selection, operations, vendor management, or a slew of choices (organizational as well as technical) and how they combine. Two completely reasonable decisions can inexorably combine to produce a batshit crazy outcome. It happens so often that we almost don’t see it.

It’s not always about technology either– in fact, technology is almost always ancillary to the actual issue. To take an extreme example, let’s get real. Let’s talk about Digg.

Digg

In 2010, Digg underwent a site redesign, promptly losing a quarter of its audience before being written into Web 2.0 obsolescence. For those who weren’t around then, it was a mild shit-show. Companies pondering a redesign at the time freaked out. The new site design itself wasn’t bad per se, but it, combined with the notion that Digg’s audience would accept the change, was such a lousy misstep; the controversy and technical glitches had an outsized impact. People left the site en masse.

A post-mortem on how a buggy migration from MySQL to Cassandra caused the company tank would make zero sense. The redesign itself was not a single cause. To fully understand the problem, one must appreciate Digg’s product org, decision-making capability (or lack thereof), and marketing departments to understand what happened.

Not that it mattered for Digg anyway: once you lose a quarter of your market at the same time Facebook began its incredible ascendency, the game is over, and you don’t get a second chance.

Thankfully for the rest of us, failure modes we experience do not pose as existential a risk. Maybe your payment integration goes down for an hour, or your date-time logic doesn’t account for daylight savings. Perhaps your customers get kicked out of a live-chat room due to an AWS outage. These may be painful, but the business can usually recover quite quickly.

So why does software break?

The short answer: entropy. The long answer is that things break because that’s their default state. The default state of any complex system is defined by its failure modes.

You can invest more time, energy, or people in accounting for every possible failure mode. Still, once you account for prominent failure modes (e.g., “Do we even have backups?”), there are diminishing returns. Just ask any company which had an impossible situation happen (e.g., a hard delete of customer data or failure of a DR plan to materialize when needed).

Everyone’s software breaks eventually.

The source of most cases is only partly technical – more is often needed to make organizations more “fault-tolerant.” Even with a culture built around “blameless” incident reviews, or automated collection of post-mortem data, looking for the single cause of an outage incentivizes people to find needles in haystacks. This risks having a culture of avoidance when it comes to having honest conversations (e.g., Who makes decisions? How do we onboard people? How does inter-department coordination help/hamper the impact of these issues?)

The intersection of… (@fin777)

Given that everyone’s software breaks (yes, even yours), thinking about resilience is a more satisfying and grown-up way to approach things anyway. Looking at graphs, pretending humans are an inert component of all these failure modes can only get you so far.

You can never predict all the possible ways a system will fail, but time spent understanding how that system intersects with the organization can provide much better outcomes.

Regardless of why your software breaks, it’s likely the systems your organization has in place for learning from incidents are optimized around the wrong thing: finding a singular cause behind failures. While that approach may be effective in the narrowest cases, I’d argue those cases are usually not of interest to the business nor impactful toward understanding your next failure mode.

These “important” failures should concern themselves with the intersection of technology and people. And organizations don’t devote as much time to that as they should.